ScyllaNet vs. YOLOv8: Evaluating Performance and Capabilities

Zhora Gevorgyan

Lead Computer Vision Engineer

In the rapidly evolving field of computer vision, different systems and models are developed to tackle tasks such as object detection, tracking, and recognition. This comparison aims to provide insights into the accuracy and hardware usage of two such architectures: ScyllaNet and YOLOv8.

Everyone is talking about ChatGPT and how cool it is, with claims that it can replace many jobs. However, in real-life applications such as object detection from CCTV cameras, we face the significant challenge of not missing objects while also avoiding false positives. While there is much excitement around large language models (LLMs) and their potential to take over many tasks, a crucial factor in their success is the enormous amount of data they are trained on. Object detection tells a different story. Take, for example, the evolution from YOLOv3 to YOLOv8. YOLOv3 achieved 33% mAP on the COCO validation set, which was impressive at the time. YOLOv8 improves on this significantly, with mAP values reaching 53.9% on the same dataset. The gain comes from advancements in architecture and optimization techniques, which shows that progress in deep learning can be achieved through better architectures and algorithms rather than just more data.

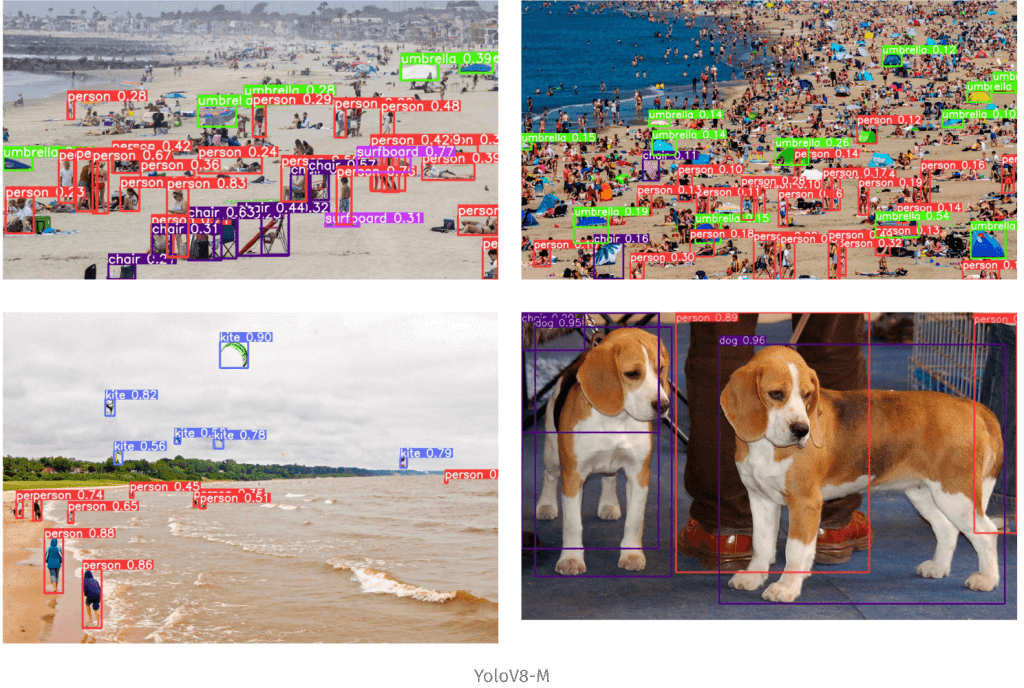

Let's dive into how YOLOv8, which in my opinion is currently the best open-source architecture, performs on long-distance, not-so-visible images with many objects. You may wonder why I am not focusing on close-range, clear, and shiny images. Well, no one cares if you can identify the obvious.

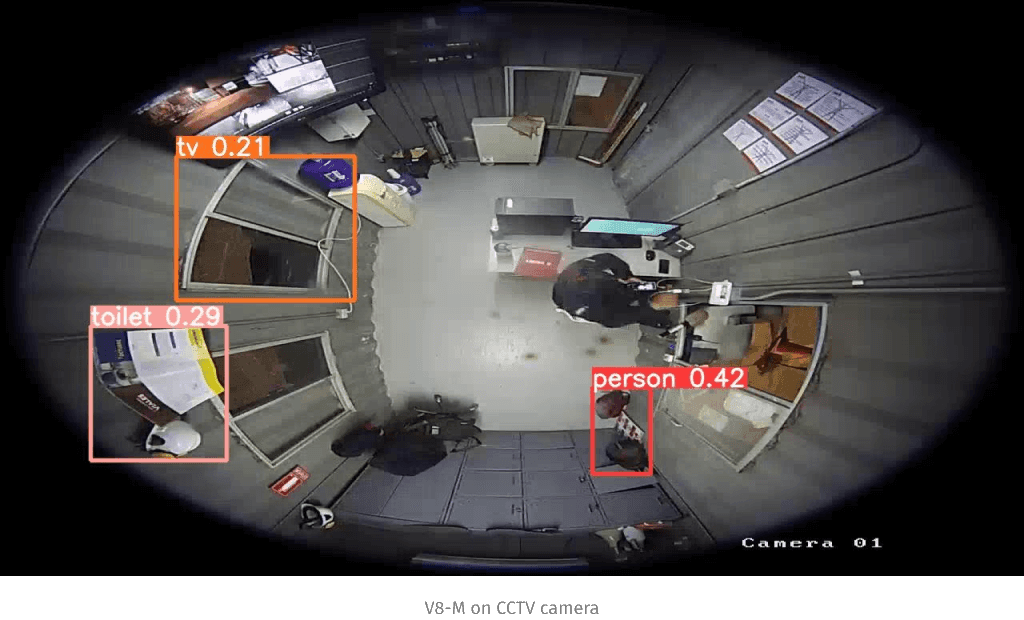

In the second image, I lowered the detection threshold from the conventional 0.2 to 0.1 to obtain more detections, because initially there were only 3–4 detections from the whole crowd. And this is from one of the best computer vision architectures in the world. Even a 3-year-old child could identify the objects in these images more precisely. This highlights a significant limitation, especially considering that these are not even the poor-quality CCTV images we often have to deal with, i.e., images captured in infrared or under varying angles and lighting conditions. Speaking of angles, look at how YOLOv8-M performs on the following top-down fisheye camera example.

These limitations of one of the best object detection architectures should make us think twice when discussing modern AI and comparing it to human intelligence. We should consider which benchmark datasets are conventionally used. Are they biased? Do objects in the images cover all possible angles and views? Do they cover the different sizes, qualities, illumination, and color deviations we observe in real-life situations? And here I pose a relevant question: can the model architecture compensate for some of the dataset's drawbacks? In other words, can we come up with an improved model architecture and training approach to get a “smarter” and more capable model from the same dataset? Well, my experience says we can. Below, I compare the ScyllaNet model with YOLOv8 and briefly highlight some of the changes behind the gains we observe.

For this comparison, I chose the ScyllaNet-S model size and YOLOv8-M. Note that ScyllaNet-S is much faster than YOLOv8-M, achieving an inference speed of 0.87 ms per frame using FP32, compared to YOLOv8-M's 1.83 ms per frame using FP16.
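To put the two latencies in perspective, the short calculation below converts them to frames per second and a relative speedup. It uses only the two figures quoted above; the hardware and batch size are not stated in this article, so the result is plain arithmetic on those numbers rather than a benchmark.

```python
# Per-frame inference latencies quoted in the text, in milliseconds.
latency_ms = {
    "ScyllaNet-S (FP32)": 0.87,
    "YOLOv8-M (FP16)": 1.83,
}


def frames_per_second(ms_per_frame: float) -> float:
    """Convert a per-frame latency in milliseconds to frames per second."""
    return 1000.0 / ms_per_frame


fps = {name: frames_per_second(ms) for name, ms in latency_ms.items()}

# Ratio of the two latencies: how many times faster the smaller latency is.
speedup = latency_ms["YOLOv8-M (FP16)"] / latency_ms["ScyllaNet-S (FP32)"]

for name, value in fps.items():
    print(f"{name}: {value:.0f} frames/s")
print(f"Speedup: {speedup:.2f}x")
```

This works out to roughly 1,149 frames/s against 546 frames/s, or about a 2.1x difference, even though the faster model runs at the higher FP32 precision.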

SIoU Loss: More Powerful Learning for Bounding Box Regression

Our research is suggesting a new loss function SIoU that has proven its effectiveness not only in a number of simulations and tests but also in production.

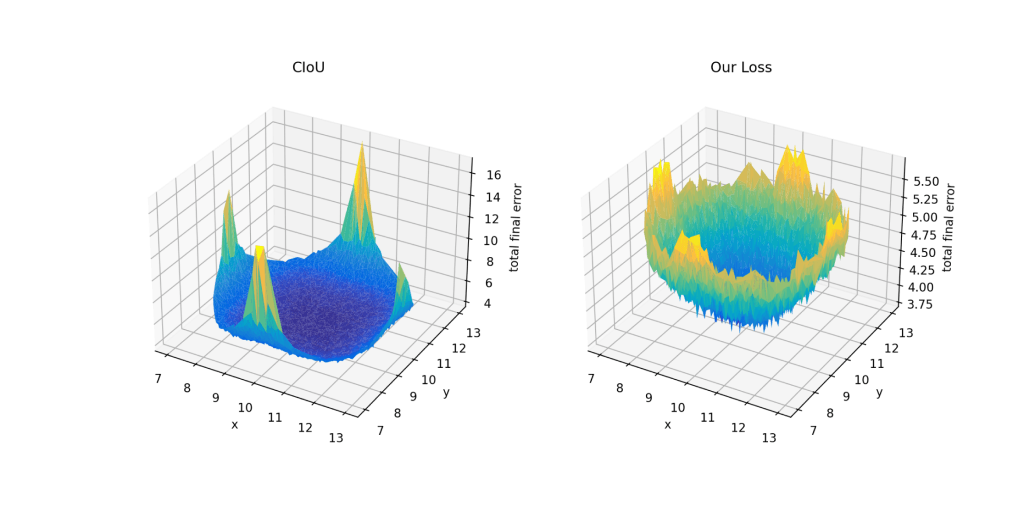

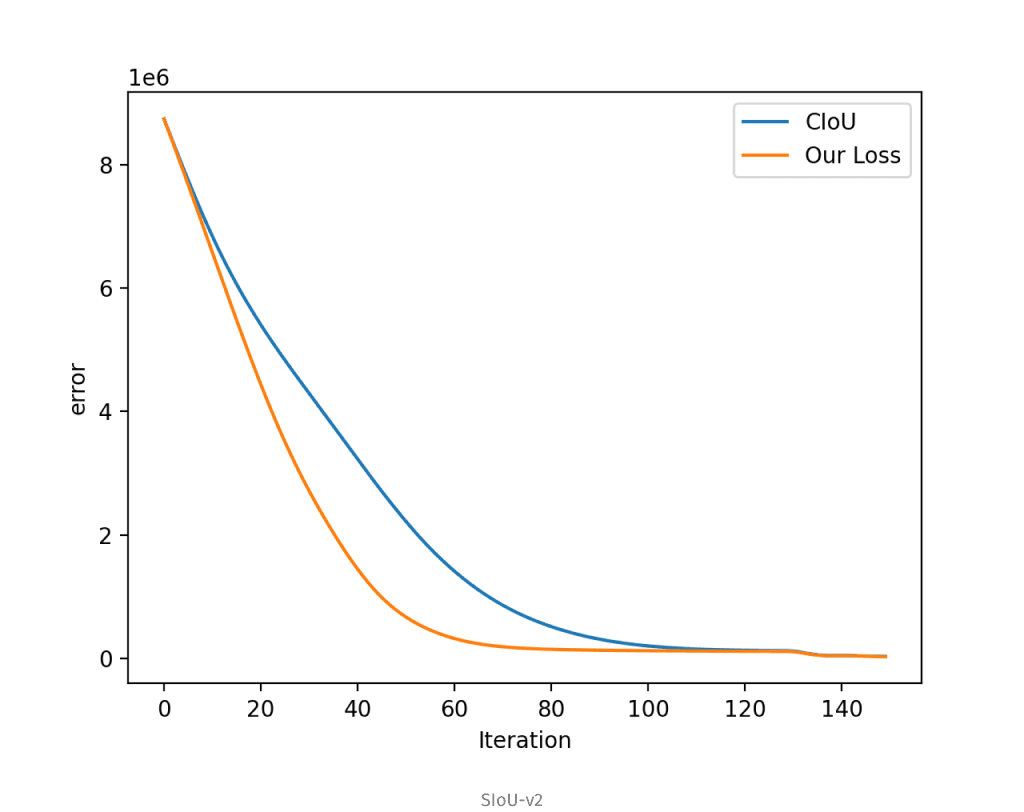

The current ScyllaNet model has an improved architecture. I also updated the SIoU loss, which was previously asymmetric. After the update, I repeated the earlier simulation experiment, this time for about 150 epochs. Below are plots comparing the conventionally used CIoU loss with the SIoU loss developed by us.

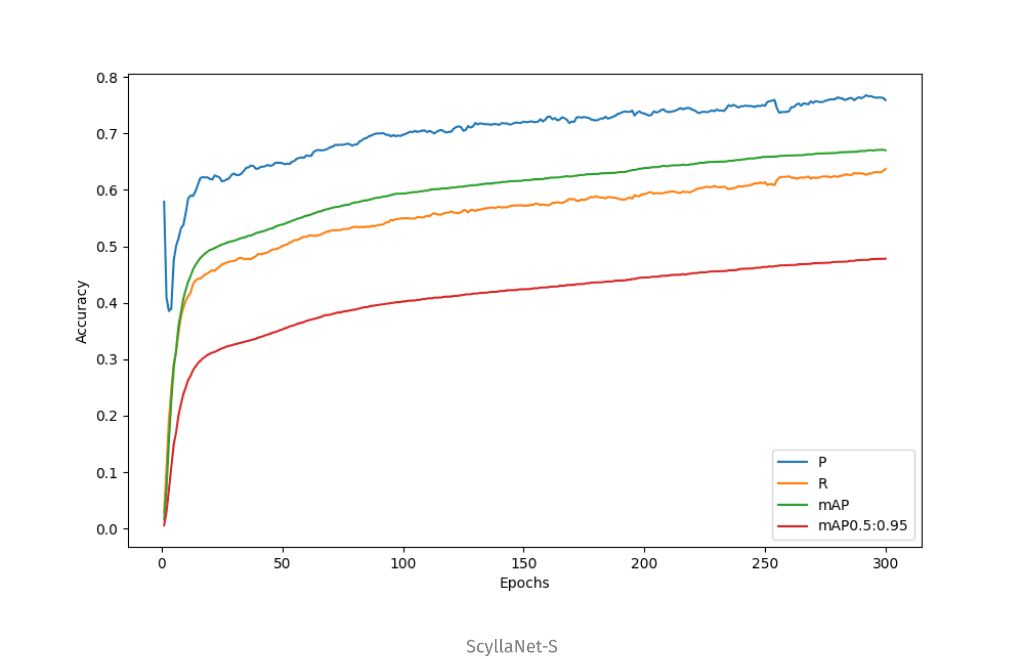

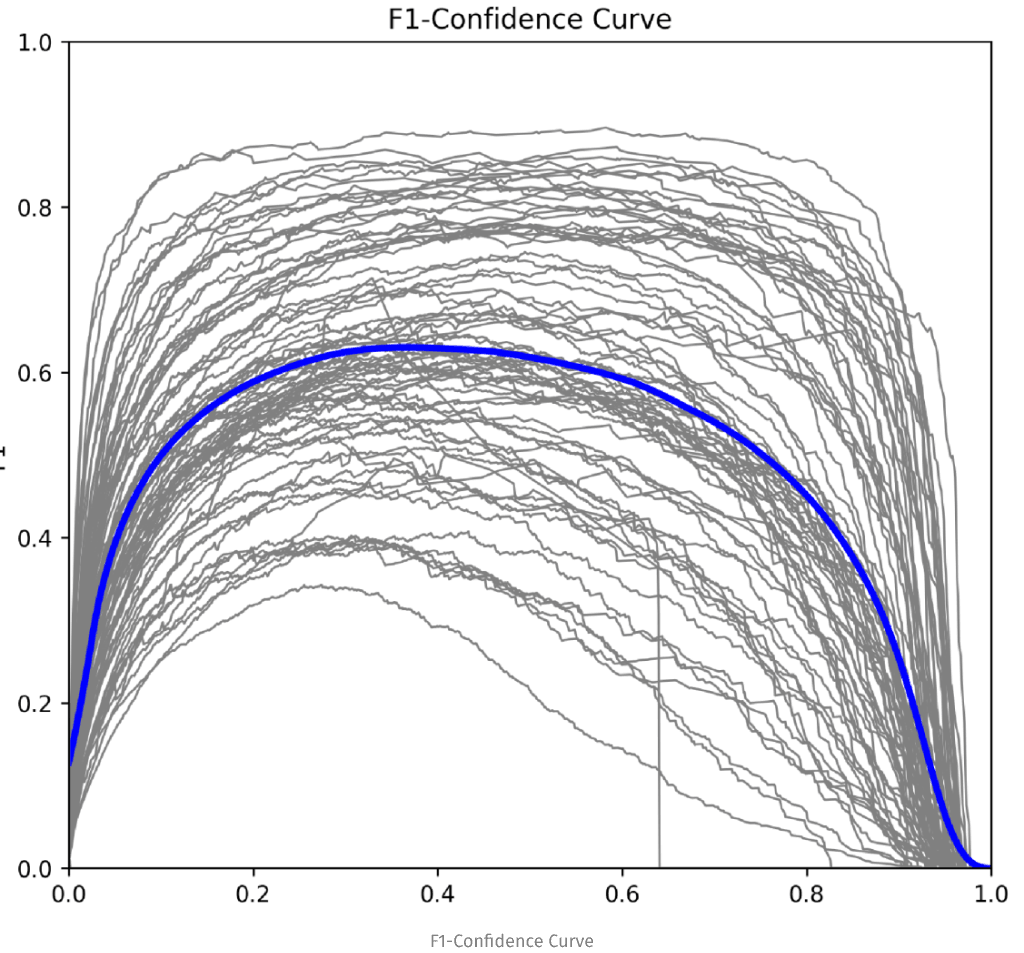
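For readers unfamiliar with the baseline in these plots, here is a minimal sketch of the CIoU loss for a single pair of axis-aligned boxes, following its commonly published definition (IoU term, normalized center distance, and aspect-ratio penalty). The function names and the `(x1, y1, x2, y2)` box layout are assumptions made for illustration. The updated SIoU formulation is not given in this article, so it is deliberately not reproduced here.

```python
import math


def iou(a, b, eps=1e-9):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter + eps)


def ciou_loss(pred, target, eps=1e-9):
    """CIoU loss: 1 - IoU + center-distance term + aspect-ratio term."""
    overlap = iou(pred, target, eps)

    # Squared distance between the two box centers.
    pcx, pcy = (pred[0] + pred[2]) / 2, (pred[1] + pred[3]) / 2
    tcx, tcy = (target[0] + target[2]) / 2, (target[1] + target[3]) / 2
    rho2 = (pcx - tcx) ** 2 + (pcy - tcy) ** 2

    # Squared diagonal of the smallest box enclosing both boxes.
    enc_w = max(pred[2], target[2]) - min(pred[0], target[0])
    enc_h = max(pred[3], target[3]) - min(pred[1], target[1])
    c2 = enc_w ** 2 + enc_h ** 2 + eps

    # Aspect-ratio consistency term v and its trade-off weight alpha.
    pw, ph = pred[2] - pred[0], pred[3] - pred[1]
    tw, th = target[2] - target[0], target[3] - target[1]
    v = (4 / math.pi ** 2) * (
        math.atan(tw / (th + eps)) - math.atan(pw / (ph + eps))
    ) ** 2
    alpha = v / ((1 - overlap) + v + eps)

    return 1 - overlap + rho2 / c2 + alpha * v


# Two 2x2 boxes offset diagonally by one unit.
print(ciou_loss((0, 0, 2, 2), (1, 1, 3, 3)))
```

For the two boxes in the example, the IoU is 1/7, the center-distance term is 2/18, and the aspect-ratio term is zero because both boxes are square, so the loss is 1 - 1/7 + 1/9. Note that CIoU penalizes only the distance between centers, not the direction of the offset.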

As the plots above show, the error distribution from the simulations now resembles a symmetrical paraboloid more closely than before, indicating that the loss will optimize each box similarly from every angle. Besides updating the loss and the architecture, we also updated our model optimizer. Previously, we employed a genetic algorithm, which converged effectively but was somewhat slow. We now use the Tree-structured Parzen Estimator instead. This algorithm builds a probabilistic model of the objective function and iteratively refines it to focus the search on promising regions of the hyperparameter space. Here are the graphs depicting the development of the metrics over the training epochs, along with the F1 confidence curves.

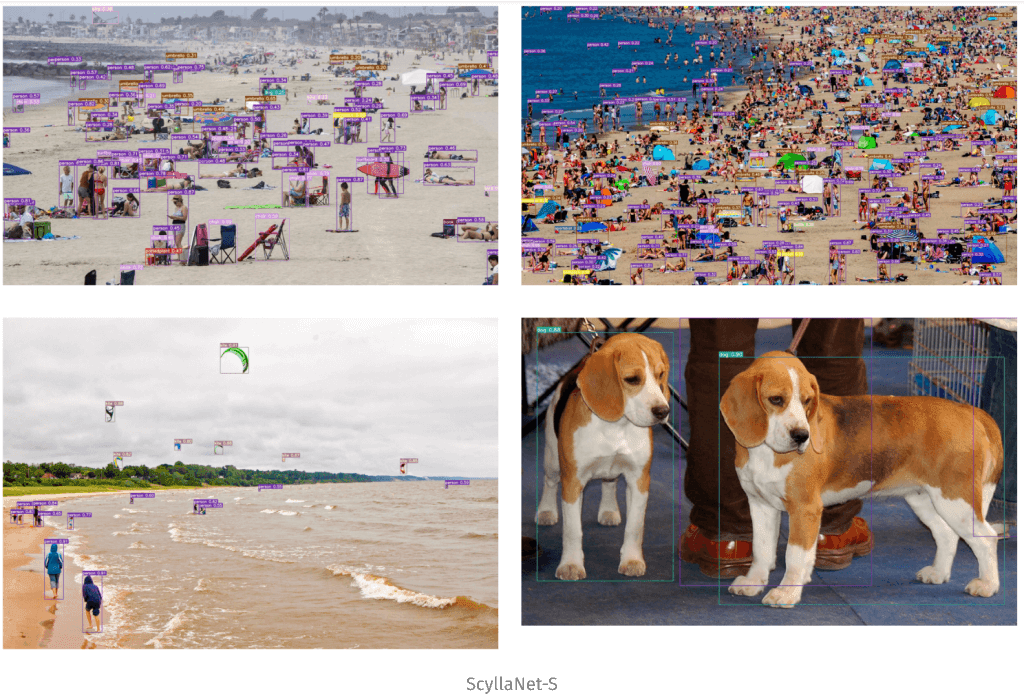
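The idea behind the Tree-structured Parzen Estimator can be illustrated with a toy one-dimensional version. The sketch below is a simplification under stated assumptions: a single continuous hyperparameter, a fixed kernel bandwidth, and a hypothetical quadratic `toy_loss` standing in for a real training run. The names `tpe_minimize`, `gaussian_kde`, and `toy_loss` are illustrative and are not taken from Scylla's training code, which this article does not show.

```python
import math
import random


def gaussian_kde(points, bandwidth):
    """Return a density: an equal-weight mixture of Gaussians at `points`."""
    norm = bandwidth * math.sqrt(2 * math.pi)

    def density(x):
        total = sum(math.exp(-0.5 * ((x - p) / bandwidth) ** 2) for p in points)
        return total / (norm * len(points))

    return density


def tpe_minimize(objective, low, high, n_trials=60, n_startup=10,
                 gamma=0.25, n_candidates=24, seed=0):
    """Toy 1-D TPE loop; assumes n_startup >= 2 so both groups are non-empty."""
    rng = random.Random(seed)
    bandwidth = (high - low) / 10.0  # fixed bandwidth, a simplification
    history = []  # (x, loss) pairs observed so far

    for trial in range(n_trials):
        if trial < n_startup:
            # Warm-up: plain random search to seed the model.
            x = rng.uniform(low, high)
        else:
            # Split observations into the best gamma fraction and the rest.
            ranked = sorted(history, key=lambda item: item[1])
            n_good = min(len(ranked) - 1, max(1, int(gamma * len(ranked))))
            good = [xi for xi, _ in ranked[:n_good]]
            bad = [xi for xi, _ in ranked[n_good:]]
            good_density = gaussian_kde(good, bandwidth)
            bad_density = gaussian_kde(bad, bandwidth)

            # Sample candidates from the "good" density, clipped to the bounds.
            candidates = [
                min(max(rng.gauss(rng.choice(good), bandwidth), low), high)
                for _ in range(n_candidates)
            ]
            # Evaluate next the candidate with the highest good/bad ratio.
            x = max(candidates,
                    key=lambda c: good_density(c) / (bad_density(c) + 1e-12))
        history.append((x, objective(x)))

    return min(history, key=lambda item: item[1])


def toy_loss(x):
    """Hypothetical objective with its minimum at x = 0.3."""
    return (x - 0.3) ** 2


best_x, best_loss = tpe_minimize(toy_loss, 0.0, 1.0)
print(f"best x = {best_x:.3f}, loss = {best_loss:.5f}")
```

Each iteration fits one density to the best-performing trials and another to the rest, then evaluates the candidate where the ratio of the two is highest. This is how the search concentrates on promising regions instead of sampling the space uniformly.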

Let’s check detections produced with ScyllaNet-S using our latest trained model.

Clearly, there are significant improvements over YOLOv8-M: more objects are detected in the crowd, many smaller objects are correctly identified, and the bounding boxes in the last image are correct.

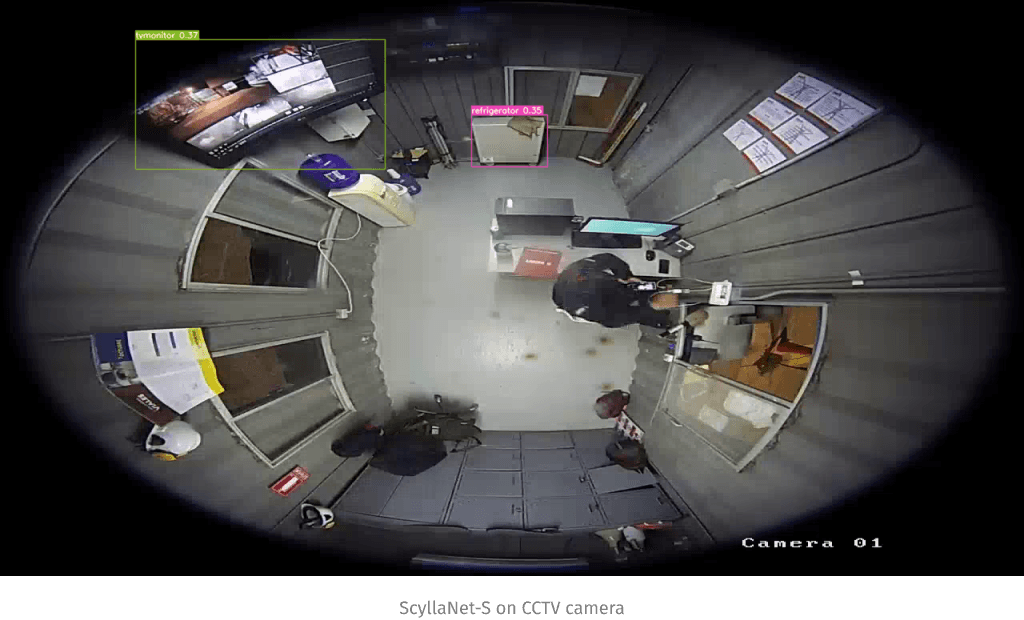

Finally, let's see how our solution performs on a 'challenging' image from a fisheye camera.

Clearly better, yet not perfect. The person is not detected, which suggests that it may be difficult to develop a model capable of detecting this person using the COCO dataset alone. However, the path we have taken so far shows that the model architecture is definitely key in this field. At Scylla, our goal is not only to collect data but also to create the best possible models and training routines to learn from that data.
