The Impact of Large Datasets on ScyllaNet-S Performance

Zhora Gevorgyan

Lead Computer Vision Engineer

In the ongoing effort to improve object detection models, one factor comes up again and again: data. The training dataset has a major effect on model performance. I recently trained ScyllaNet-S on a larger and more diverse dataset, and the results improved markedly. This article shows how dataset size and quality influence the performance of ScyllaNet-S and describes the improvements observed.

The role of data in model training

Large and diverse datasets cover a wider variety of scenarios, which helps models generalize. When a model is exposed to a wide range of conditions, such as different lighting, viewing angles, and object sizes, it becomes more robust and handles real-world situations more effectively. Recall the 'challenging' image from the previous article, which neither our model nor YOLO detected. This may be due to the limitations of the COCO dataset.

Training ScyllaNet-S on a large dataset

Dataset characteristics

The new dataset used for training ScyllaNet-S included:

● Images captured under various lighting conditions

● Diverse angles and perspectives

● Different resolutions and qualities

● Scenarios with varying object densities

This dataset aimed to cover a broad spectrum of real-life scenarios, providing a comprehensive training ground for ScyllaNet-S.
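One way to check whether a dataset actually covers axes like these is to tally images per condition. The sketch below is a minimal illustration under assumed inputs: the `ImageRecord` fields, the tag values, and the resolution and density thresholds are all hypothetical, not the schema of the dataset used for ScyllaNet-S.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class ImageRecord:
    # Hypothetical per-image metadata; field names and tag values are illustrative.
    lighting: str     # e.g. "day", "night", "ir"
    viewpoint: str    # e.g. "eye_level", "overhead"
    height: int       # image height in pixels
    num_objects: int  # number of annotated boxes in the image


def density_bucket(num_objects: int) -> str:
    """Bin the annotated object count into coarse density levels (arbitrary thresholds)."""
    if num_objects <= 2:
        return "sparse"
    if num_objects <= 10:
        return "medium"
    return "crowded"


def coverage_report(records):
    """Count how many images fall into each value of each diversity axis."""
    return {
        "lighting": Counter(r.lighting for r in records),
        "viewpoint": Counter(r.viewpoint for r in records),
        # 720 px is an arbitrary cut-off between "low" and "high" resolution.
        "resolution": Counter("high" if r.height >= 720 else "low" for r in records),
        "density": Counter(density_bucket(r.num_objects) for r in records),
    }


records = [
    ImageRecord("day", "eye_level", 1080, 1),
    ImageRecord("night", "overhead", 480, 15),
    ImageRecord("ir", "overhead", 720, 5),
]
report = coverage_report(records)
```

An axis whose counts are heavily skewed, for example almost no `night` or `ir` images, points to the kind of gap that a model trained on that data is likely to inherit.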

Training process

Training was extensive, running for approximately 400 epochs. The model's architecture and loss functions were kept the same as those of the ScyllaNet-S model trained on the COCO dataset, to isolate the effect of dataset size and diversity.
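Keeping the architecture and loss fixed while changing only the data makes this a controlled comparison. A lightweight way to guard that in practice is to describe each run as a config object and check that the two runs differ only in the dataset. The `TrainConfig` fields and values below are hypothetical placeholders, not ScyllaNet's actual training configuration.

```python
from dataclasses import asdict, dataclass, replace


@dataclass(frozen=True)
class TrainConfig:
    # Hypothetical fields; a real config would hold many more settings.
    architecture: str
    loss: str
    dataset: str


def differing_fields(a: TrainConfig, b: TrainConfig) -> list:
    """Return the names of config fields whose values differ between two runs."""
    da, db = asdict(a), asdict(b)
    return sorted(k for k in da if da[k] != db[k])


# Placeholder values for illustration only.
coco_run = TrainConfig(architecture="ScyllaNet-S", loss="detection_loss", dataset="coco")
# replace() copies every field and overrides only the dataset.
large_run = replace(coco_run, dataset="large_diverse")

# The experiment isolates the effect of the data only if nothing else changed.
assert differing_fields(coco_run, large_run) == ["dataset"]
```

A check like this catches accidental drift, such as a modified loss setting, that would otherwise confound the comparison.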

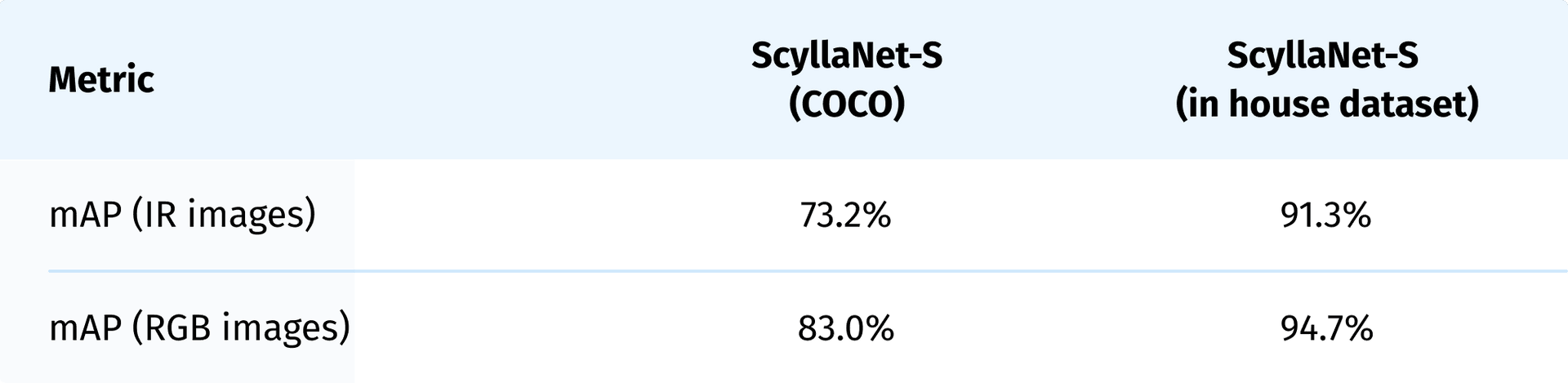

Performance improvements

Training ScyllaNet-S on the larger dataset produced significant improvements. The key metrics and observations are summarized below.

● Increased detection accuracy: The model showed a marked improvement in mean Average Precision (mAP), particularly in challenging scenarios such as crowded scenes and low-visibility conditions. The greater variety in the training data allowed ScyllaNet-S to learn more robust features, resulting in higher detection accuracy.

● Enhanced robustness to different conditions: The model's handling of varying lighting conditions, angles, and object sizes improved significantly. For instance, on low-light images ScyllaNet-S maintained high accuracy, which reflects the diverse lighting conditions included in the training dataset.

● Better generalization: ScyllaNet-S generalized better, performing well on unseen data that differed from the training set. This was particularly evident in images with unusual perspectives and object arrangements.
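mAP, the metric behind the accuracy results above, is the mean of per-class Average Precision (AP). The sketch below computes AP for one class at a single IoU threshold, using greedy matching by confidence and all-point interpolation in the PASCAL VOC style. It is an illustration only: the article does not state which mAP variant was used, COCO-style mAP additionally averages over IoU thresholds from 0.5 to 0.95 with 101-point interpolation, and the input format here is an assumption.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(detections, ground_truth, iou_thr=0.5):
    """AP for one class.

    detections: list of (image_id, score, box)
    ground_truth: dict mapping image_id -> list of boxes
    """
    n_gt = sum(len(boxes) for boxes in ground_truth.values())
    if n_gt == 0:
        return 0.0
    used = {img: [False] * len(boxes) for img, boxes in ground_truth.items()}

    # Walk detections from most to least confident and mark each as TP or FP.
    hits = []
    for img, _, box in sorted(detections, key=lambda d: d[1], reverse=True):
        overlaps = [iou(box, g) for g in ground_truth.get(img, [])]
        best = max(range(len(overlaps)), key=overlaps.__getitem__, default=-1)
        if best >= 0 and overlaps[best] >= iou_thr and not used[img][best]:
            used[img][best] = True  # each ground-truth box can be matched once
            hits.append(1)
        else:
            hits.append(0)  # duplicate, poorly localized, or spurious detection

    # Precision and recall after each detection in ranked order.
    precision, recall, tp = [], [], 0
    for k, hit in enumerate(hits, start=1):
        tp += hit
        precision.append(tp / k)
        recall.append(tp / n_gt)

    # Precision envelope: make precision non-increasing from right to left.
    for i in range(len(precision) - 2, -1, -1):
        precision[i] = max(precision[i], precision[i + 1])

    # Area under the interpolated PR curve: sum precision over recall steps.
    ap, prev_r = 0.0, 0.0
    for p, r in zip(precision, recall):
        ap += (r - prev_r) * p
        prev_r = r
    return ap


def mean_average_precision(per_class, iou_thr=0.5):
    """per_class: dict mapping class name -> (detections, ground_truth)."""
    aps = [average_precision(d, g, iou_thr) for d, g in per_class.values()]
    return sum(aps) / len(aps)
```

Because detections are ranked by confidence, a model that finds hard objects (crowded or low-light ones) only at low scores is penalized, which is why better features show up as higher AP rather than just higher recall.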

Comparison with previous training

To illustrate the impact of the larger dataset, let's compare the performance of ScyllaNet-S before and after the dataset expansion.

mAP comparison on a custom dataset

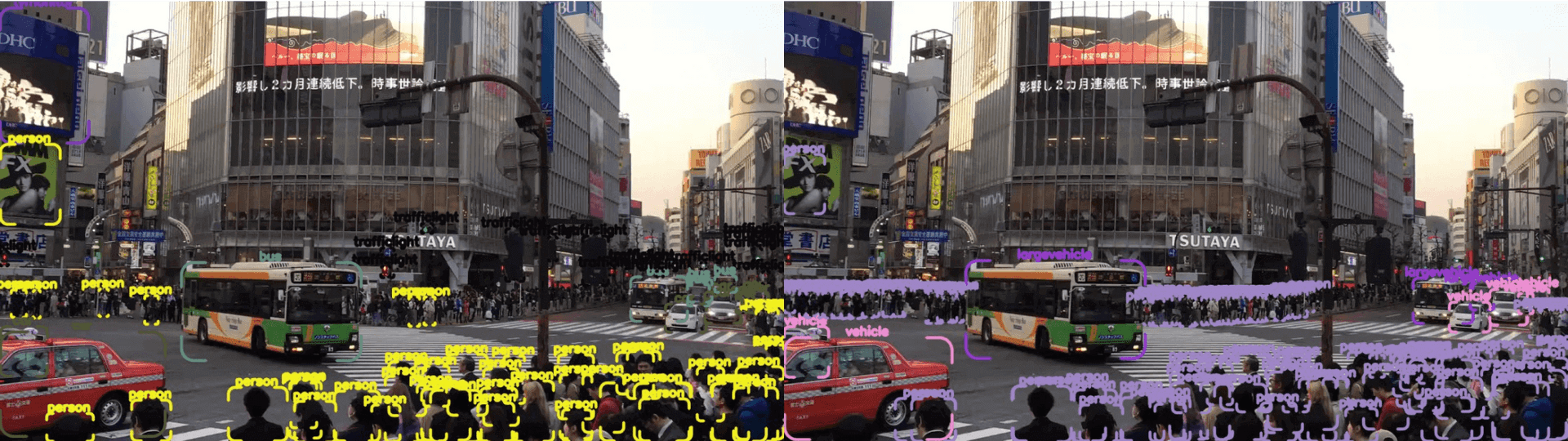

Detection examples

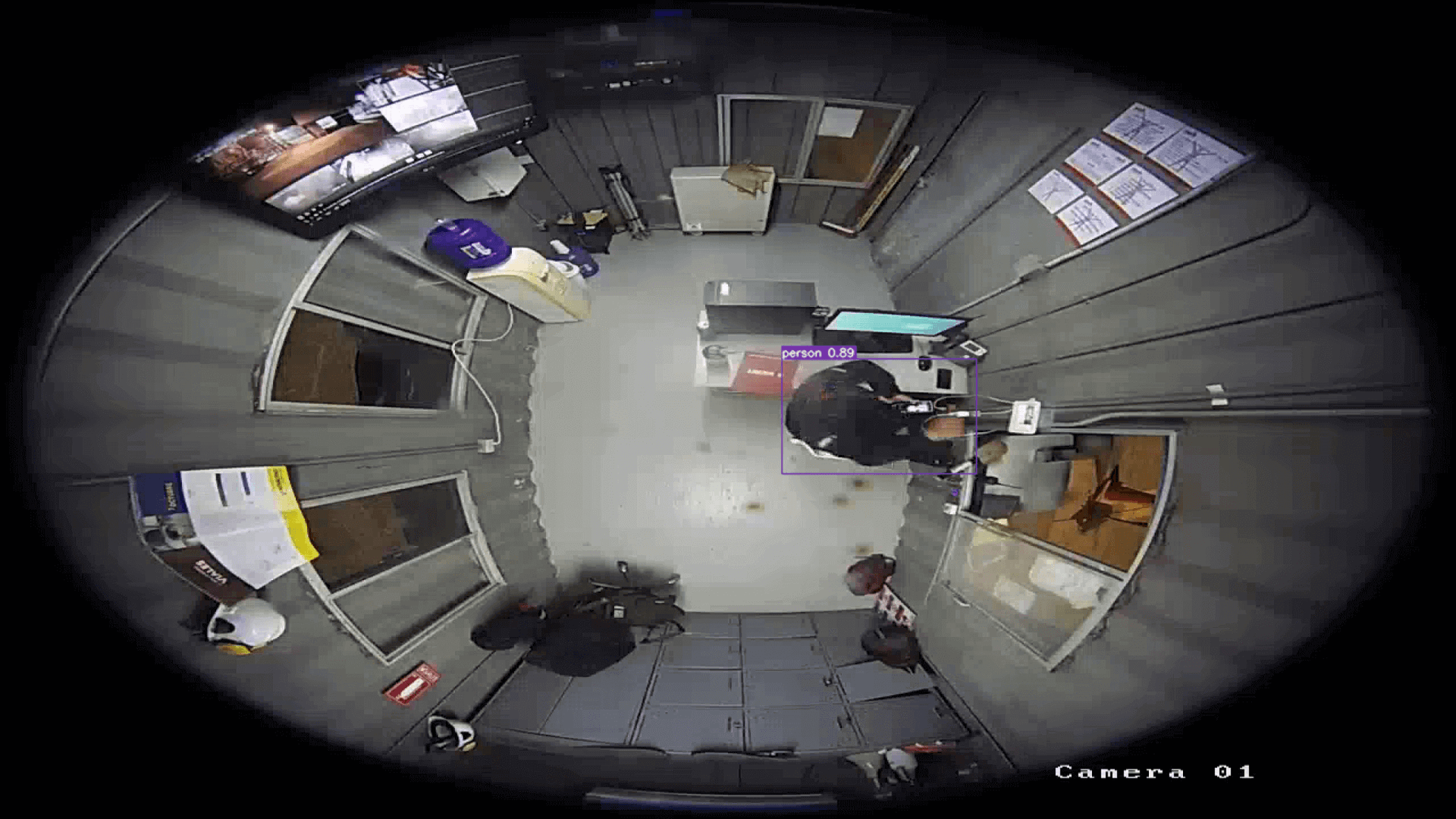

First, let's check the 'challenging' image from the previous article.

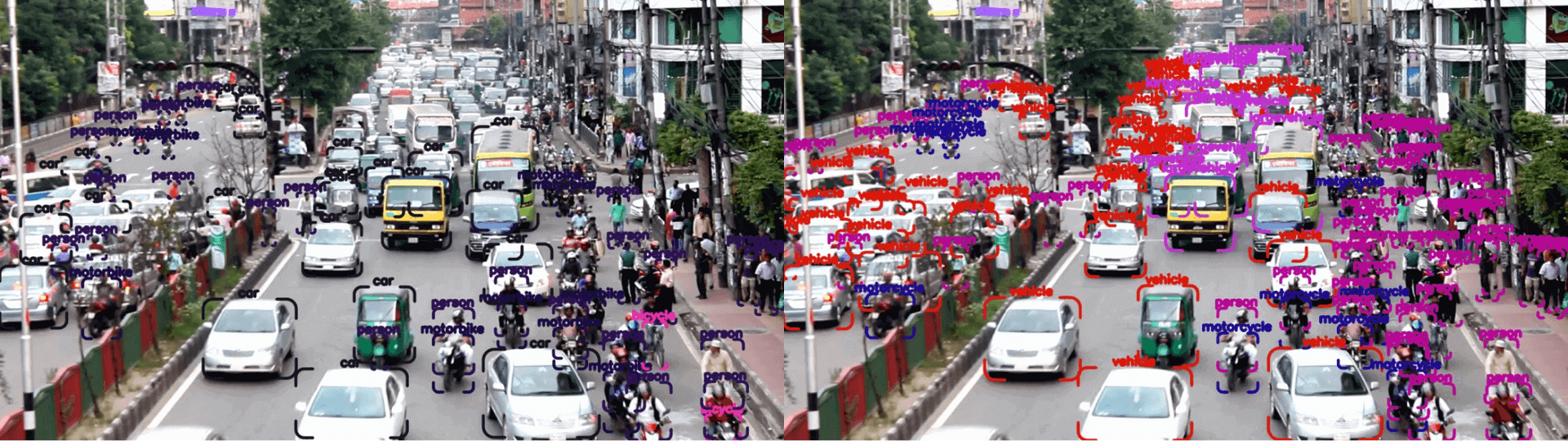

As you can see, the person is now detected, which indicates that data quality directly affects performance. Next, let's look at a couple of examples from different traffic environments.

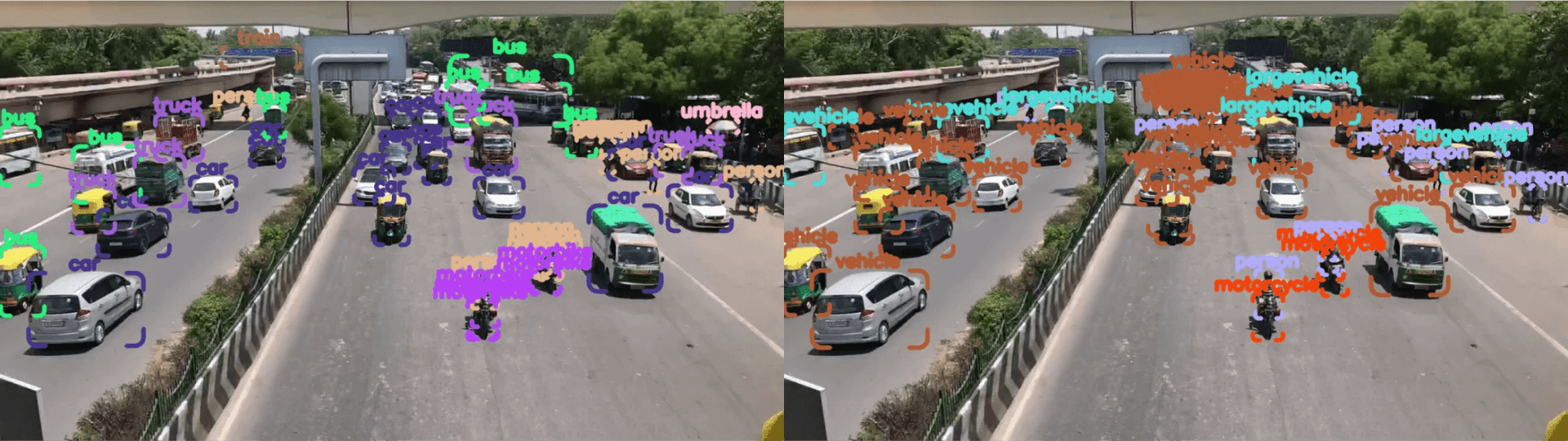

In cases such as infrared (IR) imagery, detections from the model trained on our dataset are more precise than those from the model trained on COCO, because COCO contains very little data for such scenarios, if any. Cases like these are too challenging for a model trained on COCO, so we do not compare the two models on them; such a comparison would not be meaningful.
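Claims about IR or low-light performance rest on evaluating each condition separately rather than on one aggregate number. The sketch below computes a simplified per-condition recall at fixed IoU and score thresholds. The sample format, the condition tags, and both thresholds are assumptions, and unlike full AP matching, one detection may count toward more than one ground-truth box here.

```python
from collections import defaultdict


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def recall_by_condition(samples, iou_thr=0.5, score_thr=0.5):
    """Fraction of ground-truth boxes found, grouped by image condition.

    samples: iterable of dicts with hypothetical keys
      "condition": tag such as "day", "low_light" or "ir"
      "gt":        list of ground-truth boxes
      "dets":      list of (score, box) predictions
    """
    found, total = defaultdict(int), defaultdict(int)
    for s in samples:
        kept = [box for score, box in s["dets"] if score >= score_thr]
        for g in s["gt"]:
            total[s["condition"]] += 1
            # Simplified: any kept detection that overlaps enough counts as a hit.
            if any(iou(g, d) >= iou_thr for d in kept):
                found[s["condition"]] += 1
    return {c: found[c] / total[c] for c in total}


samples = [
    {"condition": "day", "gt": [(0, 0, 10, 10)], "dets": [(0.9, (0, 0, 10, 10))]},
    {"condition": "ir", "gt": [(0, 0, 10, 10), (20, 20, 30, 30)],
     "dets": [(0.8, (20, 20, 30, 30)), (0.3, (0, 0, 10, 10))]},
]
result = recall_by_condition(samples)
```

Running the same breakdown for both models on one evaluation set shows which conditions the gains come from; a condition with only a few test images gives a noisy estimate.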

Conclusion

Training ScyllaNet-S on a larger, more diverse dataset has shown how important data is for improving model performance. We saw better detection accuracy, greater robustness across conditions, and improved generalization. This shows that large and varied datasets can substantially improve object detection models. Going forward, focusing on both advanced architectures and rich datasets will help us build even more capable models.
